Background summary

This article explains how a healthcare data team secured PII/PHI in an Azure Databricks Lakehouse using Medallion Architecture. It covers encryption at rest and in transit, column-level encryption, data masking, Unity Catalog policies, 3NF normalization for RTBF, and compliance anchors for HIPAA and CCPA.

Introduction

In healthcare, trust starts with how you protect patient data. Every lab result, claim, and encounter add to a record that links back to a person. If that link leaks, the cost is more than penalties. It affects patient confidence and care coordination.

In 2024, U.S. healthcare reported 725 large breaches, and PHI for more than 276 million people was exposed. That is an average of over 758,000 healthcare records breached per day, which shows how urgent this problem has become.

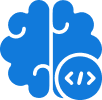

With cloud analytics and healthcare data lakes now standard, teams must protect Personally Identifiable Information (PII) and Protected Health Information (PHI) through the entire pipeline while meeting HIPAA, CCPA, and other rules.

This article shows how we secured PII/PHI on Azure Databricks using column-level encryption, data masking, Fernet with Azure Key Vault, and Medallion Architecture across Bronze, Silver, and Gold layers. The goal is simple. Keep data useful for analytics, but safe for patients and compliant for auditors. Microsoft and Databricks outline the technical controls for HIPAA workloads, including encryption at rest, in transit, and governance.

The challenge: securing PII/PHI in a cloud data lake

Healthcare data draws attackers because it contains identity and clinical context. The largest U.S. healthcare breach to date affected about 192.7 million people through a single vendor incident, and it disrupted claims at a national scale. The lesson for data leaders is clear. You must plan for data loss, lateral movement, and recovery, not only for perimeter events.

Our needs were twofold:

- Data security

Protect PII/PHI as it moves from ingestion to analytics and machine learning. - Compliance

Meet HIPAA, CCPA, and internal standards without slowing down reporting.

We adopted end-to-end encryption and column-level security and enforced them per layer using Medallion Architecture:

Bronze

Raw, encrypted data with rich lineage and tags.

Silver

Cleaned, standardized, 3NF-normalized data with PII columns clearly marked.

Gold

Aggregated, masked datasets for BI and data science, with policy-driven access and role-based access control.

For scale, we added Unity Catalog controls and policy objects that apply at schema, table, column, and function levels. This helps enforce row filters and column masks without custom code in every job.

Protecting PII/PHI: encryption at every stage

We used three layers of protection so PII/PHI stays safe and still usable.

Encryption in transit

Data travels over TLS from sources to Azure Databricks. For cluster internode traffic, Databricks supports encryption using AES-256 over TLS 1.3 through init scripts when needed. This reduces exposure during shuffle or broadcast.

Encryption at rest

Raw data in Bronze and refined data in Silver/Gold stay encrypted at rest with AES-256 using Azure storage service encryption. Azure’s model follows envelope encryption and supports FIPS 140-2 validated algorithms. This satisfies common control requirements for HIPAA encryption standards and workloads.

Column-level encryption

This is the last mile. We encrypted specific fields that contain PII/PHI.

- Identify sensitive columns. With data owners and compliance teams, we tagged names, contact details, SSNs, MRNs, and any content that can re-identify a person.

- Fernet UDFs on Azure Databricks. We used Fernet in a User-Defined Function so encryption is non-deterministic. The same input encrypts to different outputs, which reduces linking risk across tables.

- Azure Key Vault for key management. We stored encryption keys in Azure Key Vault and used Databricks secrets for retrieval. We set rotation, separation of duties, and least privilege to keep access tight. Microsoft documents customer-managed key options for the control plane and data plane.

Together, these patterns form our Azure Databricks PII encryption approach and support HIPAA control mapping.

Identifying PII in healthcare data: a collaborative and automated approach

PII storage

- Collaboration with business teams

Subject-matter experts show which fields matter most for care and billing. They confirm what counts as PII/PHI by dataset and by jurisdiction, since a payer file and an EHR table carry different fields and retention rules. We document these rules in a data catalog entry and bind them to Unity Catalog policies. - Automated Python scripts for data profiling

Our scripts look for regex patterns, outliers, and value density that point to contact info or identifiers. We score each column for PII likelihood and tag it at ingestion. We also write the score and the supporting evidence to the catalog. That way, audits can see when we marked a column and why. - Analyzing nested data for sensitive information

Clinical feeds often arrive as JSON or XML with nested groups. We flatten with stable keys, then scan inner nodes. We also search free-text fields for names or IDs. The same rules apply: detect, tag, then protect. - What we do with tags

Tags flow into policies for masking, access control, and key selection. This reduces manual steps and keeps rules consistent as teams add new feeds.

This practice underpins data governance in healthcare and makes PII/PHI classification repeatable.

SCD-2 and data masking for compliance

Healthcare keeps history for clinical and financial reasons. That means Slowly Changing Dimensions (SCD-2) and audit trails.

SCD-2 with MD5 hashing and fernet encryption

We create stable MD5 hashes for record identity and apply Fernet to the sensitive attributes. Hashing preserves version tracking. Encryption keeps versions private. When downstream jobs need to join on keys, they can rely on the hash while leaving names and contact details protected.

Data masking for specific user groups

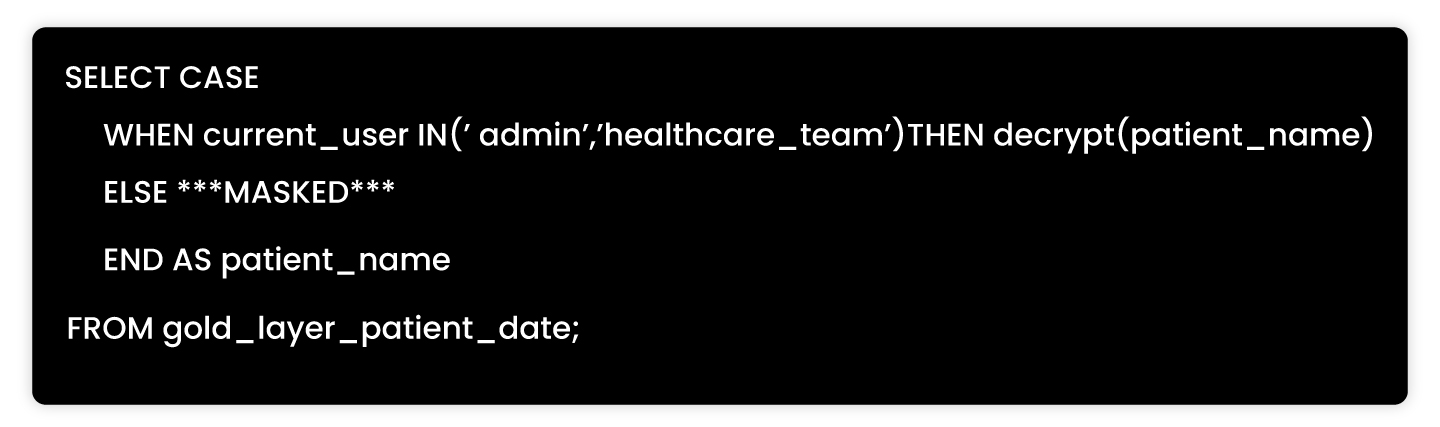

In Gold, we apply policy-based masking. Analysts who need names for outreach can see decrypted values. Others see tokens. We keep the policy in Unity Catalog as a column mask and reference the same function from SQL. Databricks documents row filters and column masks for this purpose.

Masking function steps

- Define roles and permissions

Map roles to the minimum fields needed. - Apply masking functions

Use a CASE expression or a catalog mask to return masked or decrypted values based on role. - Log access

Write grants and exceptions to an audit table so you can answer who saw what and when.

Example of masking in SQL

This is the core of our data masking and compliance solutions.

Managing CCPA and GDPR requests: right to be forgotten (RTBF)

GDPR and CCPA give people the Right to be Forgotten. Azure and Databricks document how to prepare data, set deadlines, and confirm erasure. We designed for this from day one. Microsoft Learn+1

Data normalization and separation

We encrypt PII at ingestion into Bronze, then normalize to 3NF in Silver. This separates PII from facts so we can delete or anonymize a person’s data without breaking joins. When a request arrives, we touch fewer tables and keep referential integrity intact.

GDPR/CCPA control table

We keep a table for requests with user ID, request type, received date, deadline, and status. We attach actions to a runbook so ops can trace evidence in audits.

Challenges in managing delete requests

- Data coupling

PII often sits close to clinical history and billing lines. If you delete a name in one table, you may create orphans in another. The 3NF split keeps link keys active while PII columns are removed or anonymized. - Data redundancy

The same PII appears in multiple layers and extracts. A single job must sweep Bronze, Silver, and Gold, plus exports.

Why 3NF?

- Separation of PII. Deleting in the PII table does not break unrelated metrics.

- Efficient deletion. Scoping the change to one place avoids full-dataset churn.

- Auditable control. The request table and job logs prove action within the required time window. Databricks explains timelines for RTBF and patterns for Delta Lake deletes. Microsoft LearnDatabricks

Automated deletion process

- Bronze layer. Delete or overwrite the raw files related to the user.

- Silver layer. Remove records by user_id and anonymize linked attributes.

- Gold layer. Delete or mask as needed, then re-build derived views.

This pipeline supports right to be forgotten workflows and keeps reporting stable.

Compliance considerations and final thoughts

We tied our controls to key standards.

- HIPAA on Azure Databricks

Databricks provides a compliance security profile for PHI workloads. It adds hardened images, monitoring agents, and limits preview features that do not meet the profile. You still own your compliance program, but the platform closes common gaps. - Encryption controls

Azure documents AES-256 encryption at rest and supports customer-managed keys for the control plane and DBFS. This helps you prove key ownership and rotation. - Governance controls

Unity Catalog centralizes access controls, masks, and row filters so policies live with the data. This reduces manual steps as teams add domains and products. - Risk context

Large breaches continue across the sector, including the UnitedHealth incident that reached about 192.7 million people. Breach volumes remained high in 2024 and 2025, which is why encryption, masking, and RTBF must work together.

Our architecture keeps PII/PHI private at every stage and still lets analysts get their work done. It protects patient trust and gives auditors the evidence they expect.

Conclusion: a secure and compliant data lake for healthcare

Securing PII and PHI in a cloud-based data lake calls for clear rules and consistent automation. We used Medallion Architecture, column-level encryption with Fernet, Azure Key Vault for keys, policy-based masking in Gold, and RTBF workflows tied to 3NF design. The result is a system that resists breach impact, supports HIPAA and CCPA, and maintains data utility.

Frequently asked questions

- Why use column-level encryption when storage is already encrypted?

Storage encryption protects files and disks. Column-level encryption protects specific fields inside tables so only approved users or jobs can decrypt them. It lowers the blast radius if a table is exposed. Microsoft and Databricks document how to combine both layers. - What makes Fernet suitable for PII/PHI?

Fernet provides authenticated, non-deterministic encryption. Identical inputs produce different outputs, which reduces linking and re-identification. It is simple to wrap in UDFs for Spark jobs. - How does Medallion Architecture help with compliance?

It splits the pipeline into Bronze, Silver, and Gold so you can apply encryption, masking, and access at the right point. It also improves reproducibility, which audits require. - How do Unity Catalog masks and filters work in practice?

You define a mask or filter once and attach it to a column or table. Every query sees the rule. This centralizes enforcement and keeps your SQL simple. - What is required for GDPR/CCPA RTBF in a lakehouse?

You need a request log, deadlines, repeatable deletes, and a normalized design so you erase PII without breaking facts. Microsoft and Databricks outline timelines and patterns to execute RTBF. - Are these controls enough to stop breaches?

Nothing stops every breach, but encryption, masking, governance, and RTBF reduce impact and speed recovery. The recent record breach shows why layered controls matter.